- The Critical Role of Cache Memory in Modern Computing: Enhancing Speed, Efficiency, and System Performance

- Understanding Cache Memory: A Comprehensive Overview

- Key Benefits of Cache Memory:

- Types of Cache Memory and Their Specific Applications

- How Cache Memory Enhances System Performance: Technical Insights

- Challenges and Considerations in Cache Memory Management

- Advanced Cache Technologies: The Future of Cache Memory

- Case Studies: Real-World Applications of Cache Memory

- Advanced Metrics for Evaluating Cache Memory Performance

- Conclusion: The Future of Cache Memory Technology

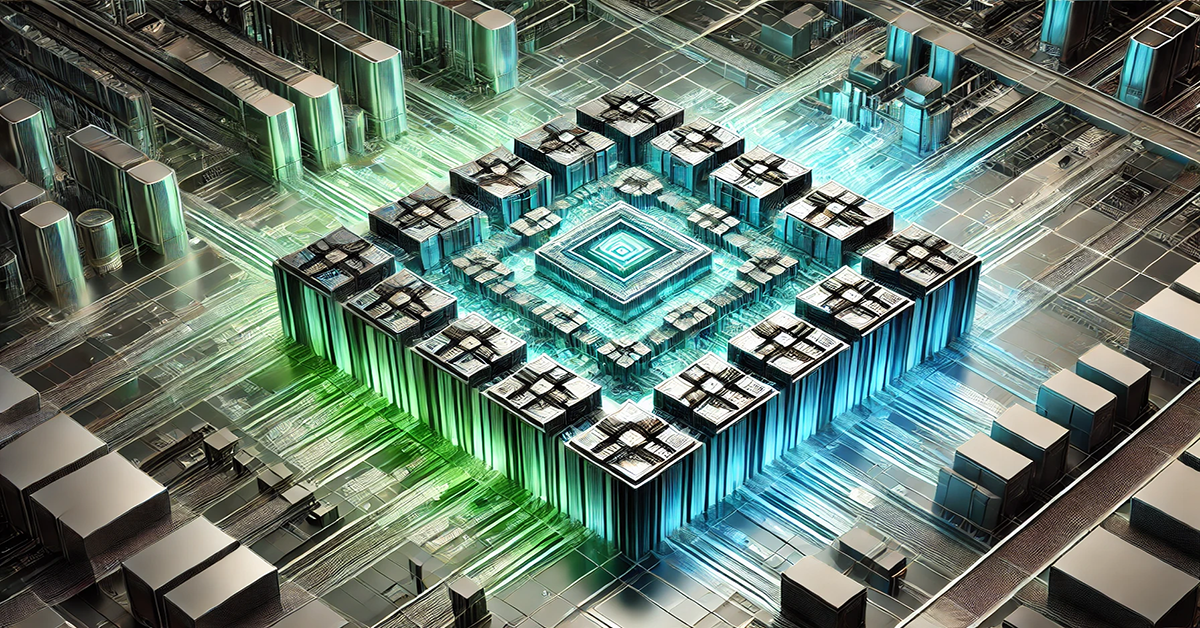

The Critical Role of Cache Memory in Modern Computing: Enhancing Speed, Efficiency, and System Performance

In the dynamic and rapidly evolving field of information technology, the relentless pursuit of greater computational speed and efficiency remains at the forefront of innovation. Among the various components that contribute to this ongoing evolution, cache memory emerges as a pivotal element in the architecture of modern computing systems. Despite its often understated presence in discussions about hardware and software, cache memory plays an indispensable role in ensuring the smooth and rapid operation of both personal and enterprise-level computing devices.

This article provides an in-depth exploration of cache memory, examining its fundamental importance, its various types, and how it optimizes performance across diverse computing environments.

Understanding Cache Memory: A Comprehensive Overview

Defining Cache Memory and Its Functionality

Cache memory is a specialized, high-speed storage layer that serves to bridge the gap between the extremely fast processing speeds of the CPU (Central Processing Unit) and the comparatively slower speed of main memory or other storage devices. It temporarily stores copies of frequently accessed data and instructions, enabling quicker retrieval and reducing the time and energy required for data processing tasks. Cache memory is typically implemented as part of the CPU architecture or as a standalone component within the system. Its primary function is to enhance computational efficiency by minimizing the time the CPU spends waiting for data to be retrieved from slower storage.

Cache memory operates based on the principle of locality, which can be divided into two categories: spatial locality and temporal locality. Spatial locality refers to the tendency of a program to access data locations that are close to each other within a short period of time. Temporal locality, on the other hand, refers to the tendency of a program to access the same data location repeatedly within a short time frame. By leveraging these principles, cache memory effectively anticipates the data that the CPU is likely to require, thereby preemptively storing it for quick access.

The Architectural Placement and Hierarchy of Cache Memory

The architecture of cache memory is typically organized into multiple levels, each with distinct characteristics in terms of size, speed, and proximity to the CPU:

- Level 1 (L1) Cache:

The L1 cache is the smallest and fastest level of cache memory, residing directly within each CPU core. It is typically divided into two separate caches: one for data (L1D) and one for instructions (L1I). The L1 cache is designed to deliver data to the core within a few clock cycles, minimizing latency and maximizing processing efficiency. Due to its limited size, the L1 cache stores only the data and instructions that are most likely to be used immediately by the CPU.

- Level 2 (L2) Cache:

Located slightly further from the execution units than the L1 cache, the L2 cache is larger but slower. It serves as an intermediary between the fast L1 cache and the slower L3 cache or main memory. The L2 cache stores additional data and instructions that may not fit in the L1 cache but are still required frequently enough to warrant quick access. Unlike the L1 cache, the L2 cache in most modern CPUs is unified, holding both data and instructions in a single structure.

- Level 3 (L3) Cache:

The L3 cache, often shared among multiple CPU cores, is larger and slower than both the L1 and L2 caches. It typically acts as the last-level cache (LLC) before data is fetched from main memory (RAM). The L3 cache is crucial for storing data that is accessed less frequently but still needs to be retrieved quickly to avoid performance bottlenecks. The size of the L3 cache varies significantly with the CPU architecture, ranging from a few megabytes on entry-level parts to tens of megabytes or more on high-performance processors.

- L4 Cache and Beyond:

In some high-end computing systems, particularly those used in server environments or specialized applications, an additional level of cache, known as L4, may be implemented. L4 cache is typically larger and slower than L3 and may reside outside the CPU die, integrated within the system memory or on a separate chip. It provides an additional layer of caching to further reduce latency in data retrieval.

The Importance of Cache Memory in Modern Computing Systems

Cache memory’s role in modern computing systems cannot be overstated. It is fundamental to the efficient operation of virtually all computational processes, from basic everyday tasks to highly complex scientific simulations. The significance of cache memory lies in its ability to enhance system performance by reducing the time it takes for the CPU to access frequently used data and instructions. This reduction in access time is critical for optimizing the execution of tasks that require high processing speeds and for improving the overall user experience.

Key Benefits of Cache Memory:

- Reduced Latency:

By storing frequently accessed data close to the CPU, cache memory significantly reduces the latency associated with data retrieval. This is particularly important in time-sensitive applications, such as real-time data processing, gaming, and high-frequency trading, where even microseconds of delay can impact performance.

- Increased Throughput:

Cache memory improves system throughput by allowing the CPU to process more instructions in a given period of time. By reducing the time spent waiting for data to be retrieved from slower storage, the CPU can execute more operations, leading to higher overall system efficiency.

- Enhanced Energy Efficiency:

Accessing data from cache memory requires less energy than fetching it from main memory or disk storage. This reduction in energy consumption is crucial for mobile devices, where battery life is a primary concern, as well as in data centers, where energy efficiency directly impacts operational costs.

- Improved User Experience:

For end-users, cache memory contributes to faster application load times, smoother performance, and a more responsive computing experience. Web browsers, for example, use cache to store copies of web pages and media files, enabling quicker access to frequently visited sites and reducing bandwidth usage.

Types of Cache Memory and Their Specific Applications

Cache memory is not a monolithic entity; it comes in various forms, each designed to address specific performance needs within the computing architecture. Understanding these different types of cache memory is essential for comprehending how they collectively contribute to the overall efficiency of modern computing systems.

1. CPU Cache Memory

As mentioned earlier, CPU cache memory is typically organized into multiple levels (L1, L2, and L3), each serving a unique function in enhancing processor performance. CPU cache is crucial for minimizing the time it takes for the CPU to access data and instructions that are frequently used during the execution of programs.

- Instruction Cache (I-Cache):

The instruction cache stores copies of the instructions that the CPU is likely to execute next. By keeping these instructions close to the processing cores, the CPU can quickly fetch and execute them, reducing the time spent waiting for instructions to be loaded from slower memory.

- Data Cache (D-Cache):

The data cache stores the data that the CPU needs to execute instructions. This includes variables, temporary results, and other data structures that are frequently accessed during program execution. By storing this data in the cache, the CPU can avoid the latency associated with accessing it from main memory.

2. Disk Cache Memory

Disk cache, also known as buffer cache, is used to store copies of data that have been recently read from or written to disk storage. This type of cache is typically implemented in RAM, providing a much faster alternative to retrieving data directly from the disk. Disk cache is especially important for operating systems and applications that require frequent disk access, such as file systems, databases, and virtual memory systems.

- Read Cache:

Read cache stores copies of data that have been recently read from the disk. By keeping this data in a fast-access cache, the system can avoid repeated reads from the slower disk, significantly improving read performance.

- Write Cache:

Write cache temporarily holds data that is being written to the disk. This allows the system to complete write operations more quickly, without having to wait for the data to be physically written to the disk. The buffered data is then flushed to the disk asynchronously, often in batches, which minimizes the impact on overall performance; until the flush completes, a crash or power loss can lose those writes.

3. Web Cache Memory

Web cache is a specialized type of cache used by web browsers, proxy servers, and content delivery networks (CDNs) to store copies of web pages, images, and other media content. Caching this content reduces the load on origin web servers and improves the speed at which users can access frequently visited websites.

- Browser Cache:

The browser cache stores copies of web pages and other media content on the user’s device. This allows the browser to quickly load previously visited sites without having to download the content again from the web server. Browser cache is particularly useful for reducing bandwidth usage and improving the user experience on slow or metered internet connections.

- Proxy Cache:

Proxy servers use cache to store copies of web content for multiple users. By caching this content, the proxy server can reduce the load on the web server and improve access speeds for all users who access the same content. Proxy cache is commonly used in enterprise environments, where multiple users access the same web resources.

- Content Delivery Network (CDN) Cache:

CDNs use distributed caches to store copies of content at various points in their network, close to the end-users. This reduces the distance that data must travel, resulting in faster content delivery and reduced latency. CDNs are essential for delivering high-performance web services, especially in geographically diverse environments.

How Cache Memory Enhances System Performance: Technical Insights

The performance benefits of cache memory are rooted in its ability to reduce latency, increase throughput, and optimize the overall efficiency of computing systems. These benefits are achieved through a combination of architectural design, data management strategies, and intelligent algorithms that govern how data is stored, retrieved, and managed within the cache.

1. Efficiency in Data Retrieval: Minimizing Latency and Miss Rates

Cache memory is designed to be the first point of access for data requested by the CPU or other system components. When a data request is made, the system first checks the cache memory to see if the data is already available—a process known as a cache lookup. If the data is found in the cache (a cache hit), it is retrieved quickly, avoiding the need to access slower storage. If the data is not found in the cache (a cache miss), it is retrieved from the primary storage and subsequently stored in the cache for future requests.

- Cache Hit Rates:

The effectiveness of a cache memory system is often measured by its cache hit rate: the percentage of data requests that are successfully fulfilled by the cache. A high cache hit rate indicates that the cache is effectively storing the most frequently accessed data, reducing the need for slower data retrieval operations.

- Cache Miss Penalty:

When a cache miss occurs, the system incurs a penalty in terms of increased latency and reduced performance. This penalty is determined by the time it takes to retrieve the data from primary storage and the additional overhead of updating the cache. Minimizing cache miss rates is therefore critical for maintaining high system performance.
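The lookup, hit, and miss behaviour described above can be illustrated with a small simulation. The sketch below assumes a direct-mapped cache (each memory block maps to exactly one cache line) and uses the standard average memory access time relation, AMAT = hit time + miss rate × miss penalty. The function names, the cache geometry, and the address trace are illustrative assumptions, not measurements of any real processor.

```python
def simulate_direct_mapped(addresses, num_lines=4, block_size=4):
    """Return (hits, misses) for a toy direct-mapped cache over byte addresses."""
    lines = [None] * num_lines  # each slot remembers which block it currently holds
    hits = misses = 0
    for addr in addresses:
        block = addr // block_size   # memory block containing this address
        index = block % num_lines    # the single cache line this block maps to
        if lines[index] == block:
            hits += 1                # cache hit: data already present
        else:
            misses += 1              # cache miss: fetch the block and fill the line
            lines[index] = block
    return hits, misses


def average_access_time(hit_time, miss_rate, miss_penalty):
    """AMAT = hit time + miss rate * miss penalty (all times in the same unit)."""
    return hit_time + miss_rate * miss_penalty


# Hypothetical trace: a sequential sweep, then a repeat of the first four addresses.
trace = [0, 1, 2, 3, 4, 5, 6, 7, 0, 1, 2, 3]
hits, misses = simulate_direct_mapped(trace)
hit_rate = hits / len(trace)
miss_rate = misses / len(trace)
print(f"hits={hits} misses={misses} hit_rate={hit_rate:.2f}")
print(f"AMAT={average_access_time(1, miss_rate, 100):.2f} cycles (assumed timings)")
```

Only the first access to each block misses here, because neighbouring addresses share a block (spatial locality) and the repeated addresses are still cached (temporal locality).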

2. Reducing Latency: The Role of Cache in Performance-Critical Applications

Latency is a critical factor in the performance of many computing applications, particularly those that require real-time data processing or involve complex computational tasks. Cache memory plays a vital role in reducing latency by providing a fast-access storage layer that can deliver data to the CPU in a fraction of the time required by main memory or disk storage.

- Gaming and Graphics Processing:

In gaming and graphics-intensive applications, the need for rapid data access is paramount. Cache memory ensures that the CPU and GPU (Graphics Processing Unit) can quickly retrieve the data needed to render high-resolution images, process physics simulations, and execute complex algorithms in real-time. This reduces frame drops, improves visual quality, and enhances the overall gaming experience.

- High-Frequency Trading (HFT):

In the financial sector, high-frequency trading relies on the ability to execute trades within microseconds, based on real-time market data. Cache memory is crucial for storing and retrieving this data at the speeds required to maintain a competitive edge in the market. By reducing the latency associated with data access, cache memory enables HFT systems to respond to market changes with minimal delay.

- Scientific Computing and Simulations:

Scientific computing applications, such as climate modeling, molecular dynamics, and astrophysics simulations, require vast amounts of data to be processed and analyzed. Cache memory helps reduce the time needed to retrieve and process this data, allowing scientists to run simulations more efficiently and obtain results faster.

3. Improving System Throughput: Leveraging Cache for Multi-User and Multi-Tasking Environments

System throughput refers to the amount of work or number of tasks that a computing system can handle within a given period. Cache memory plays a crucial role in improving throughput by enabling the CPU to process more instructions without being hindered by slow data access operations. This is especially important in multi-user and multi-tasking environments, where multiple processes or users are competing for system resources.

- Server and Cloud Computing Environments:

In server and cloud computing environments, where multiple users or applications are simultaneously accessing shared resources, cache memory helps balance the load by reducing the frequency of data retrieval operations. This enables the system to handle a higher volume of requests, improving overall throughput and reducing latency for end-users.

- Virtualization and Containerization:

Virtualization and containerization technologies allow multiple operating systems or applications to run on the same physical hardware. Cache memory is essential for ensuring that these virtualized environments can operate efficiently, by providing fast access to the data and instructions needed by each virtual machine (VM) or container. This reduces the overhead associated with context switching and resource sharing, improving the performance of virtualized workloads.

- Database Systems:

Database systems, particularly those that handle large volumes of transactions or queries, benefit significantly from the use of cache memory. By caching frequently accessed database records, indexes, and query results, the system can reduce the time needed to execute queries and improve overall database performance. This is particularly important for online transaction processing (OLTP) systems, where low latency and high throughput are critical.
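As a rough illustration of caching query results in front of a database, the sketch below uses a cache-aside pattern with a time-to-live (TTL). The `TTLCache` class, the `slow_query` function, and the 30-second TTL are all hypothetical stand-ins; a real deployment would call an actual database driver and tune expiry to how quickly the data changes.

```python
import time


class TTLCache:
    """Minimal cache-aside helper: entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock        # injectable so expiry can be tested without sleeping
        self._store = {}          # key -> (value, expiry_time)

    def get_or_load(self, key, loader):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]                       # hit: still fresh
        value = loader(key)                       # miss or expired: ask the slow store
        self._store[key] = (value, now + self.ttl)
        return value

    def invalidate(self, key):
        """Drop an entry, for example after the underlying record is updated."""
        self._store.pop(key, None)


calls = []  # records every time the (pretend) database is actually queried


def slow_query(user_id):
    """Hypothetical stand-in for an expensive database lookup."""
    calls.append(user_id)
    return {"id": user_id, "name": f"user-{user_id}"}


cache = TTLCache(ttl_seconds=30)
first = cache.get_or_load(42, slow_query)    # miss: runs the query
second = cache.get_or_load(42, slow_query)   # hit: served from the cache
print(len(calls))
```

The second lookup never reaches `slow_query`, which is the effect the paragraph above describes for frequently accessed records and query results.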

Challenges and Considerations in Cache Memory Management

While cache memory provides numerous benefits, it also presents several challenges that must be carefully managed to ensure optimal performance and reliability. These challenges include maintaining cache consistency, managing cache eviction policies, and addressing security concerns related to cache memory.

1. Cache Consistency: Ensuring Data Integrity and Accuracy

Maintaining cache consistency is critical to ensuring that the data stored in the cache accurately reflects the current state of the data in primary storage. Inconsistencies can arise when the data in primary storage is updated, but the corresponding data in the cache is not, leading to the potential for outdated or incorrect information to be served to the CPU or applications.

- Cache Invalidation:

Cache invalidation is a technique used to ensure that stale data is removed from the cache when the corresponding data in primary storage is updated. Invalidation can be triggered by various events, such as writes to the primary storage or changes in the data’s state. By invalidating outdated cache entries, the system ensures that only fresh data is served to the CPU.

- Write-Through and Write-Back Policies:

Write-through and write-back are two common policies used to manage how data is written to the cache and primary storage. In a write-through policy, every write updates both the cache and primary storage, which keeps the two consistent but adds latency to each write. In a write-back policy, data is written only to the cache at first, and the modified (dirty) entry is copied to primary storage later, typically when it is evicted or explicitly flushed. Write-back policies can improve performance but require careful management to prevent inconsistencies and data loss.
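The difference between the two write policies can be sketched with a pair of toy classes. Both wrap a plain dictionary that stands in for primary storage; the class names and the explicit `evict` method are assumptions made for illustration and do not correspond to any particular hardware or library.

```python
class WriteThroughCache:
    """Every write goes to the cache and the backing store together."""

    def __init__(self, backing):
        self.backing = backing
        self.cache = {}

    def write(self, key, value):
        self.cache[key] = value
        self.backing[key] = value      # backing store is always up to date

    def read(self, key):
        if key not in self.cache:
            self.cache[key] = self.backing[key]
        return self.cache[key]


class WriteBackCache:
    """Writes stay in the cache; dirty entries reach the backing store on eviction."""

    def __init__(self, backing):
        self.backing = backing
        self.cache = {}
        self.dirty = set()             # keys modified since they were last written back

    def write(self, key, value):
        self.cache[key] = value
        self.dirty.add(key)            # defer the slow write

    def read(self, key):
        if key not in self.cache:
            self.cache[key] = self.backing[key]
        return self.cache[key]

    def evict(self, key):
        if key in self.dirty:
            self.backing[key] = self.cache[key]   # write back before dropping
            self.dirty.discard(key)
        self.cache.pop(key, None)


store_a, store_b = {}, {}
wt = WriteThroughCache(store_a)
wb = WriteBackCache(store_b)
wt.write("x", 1)
wb.write("x", 1)
print(store_a, store_b)   # the write-back store has not seen the write yet
wb.evict("x")
print(store_b)            # now it has
```

Between `wb.write` and `wb.evict`, the cache and the backing store disagree; that window is where write-back gains speed and where the consistency risk lies.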

2. Cache Eviction Policies: Managing Limited Cache Capacity

Cache memory has limited storage capacity, so it is essential to implement efficient eviction policies to determine which data should be retained in the cache and which should be discarded when the cache becomes full. These policies are critical for ensuring that the most relevant and frequently accessed data remains in the cache, while less important data is evicted to make room for new entries.

- Least Recently Used (LRU):

The LRU eviction policy removes the data that has not been accessed for the longest period. This approach is based on the assumption that data accessed recently is more likely to be accessed again soon, making it a commonly used policy in cache management.

- First In, First Out (FIFO):

The FIFO eviction policy removes the oldest data in the cache, regardless of how frequently it has been accessed. This policy is simple to implement but may not always be optimal, as it does not consider the access patterns of the data.

- Least Frequently Used (LFU):

The LFU eviction policy removes the data that has been accessed the fewest times. This approach keeps frequently accessed data in the cache, but it requires tracking an access count for every entry, which adds bookkeeping overhead.
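To make the contrast between LRU and FIFO concrete, here is a minimal sketch built on Python's `collections.OrderedDict`. The class names and the two-entry capacity are illustrative assumptions; LFU is omitted to keep the example short, since it needs per-entry access counters.

```python
from collections import OrderedDict


class LRUCache:
    """Evicts the entry that was used least recently."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()   # oldest use first, newest use last

    def get(self, key):
        if key not in self.items:
            return None
        self.items.move_to_end(key)  # a read counts as a use
        return self.items[key]

    def put(self, key, value):
        if key in self.items:
            self.items.move_to_end(key)
        self.items[key] = value
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)   # drop the least recently used entry


class FIFOCache:
    """Evicts the entry that was inserted first, ignoring later accesses."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()   # insertion order only

    def get(self, key):
        return self.items.get(key)   # reads do not change eviction order

    def put(self, key, value):
        if key not in self.items and len(self.items) >= self.capacity:
            self.items.popitem(last=False)   # drop the oldest insertion
        self.items[key] = value


lru, fifo = LRUCache(2), FIFOCache(2)
for cache in (lru, fifo):
    cache.put("a", 1)
    cache.put("b", 2)
    cache.get("a")       # touch "a" so the two policies diverge
    cache.put("c", 3)    # forces one eviction

print(list(lru.items))   # "b" was evicted: it was used least recently
print(list(fifo.items))  # "a" was evicted: it was inserted first
```

The same access sequence leaves different contents in the two caches, which is why the choice of eviction policy matters for workloads with strong temporal locality.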

3. Security Concerns: Protecting Cache Memory from Attacks

Cache memory, like other system components, is not immune to security threats. Attackers can exploit vulnerabilities in cache memory to gain unauthorized access to sensitive data or to disrupt system operations. Protecting cache memory from these threats is essential for maintaining the integrity and security of the computing system.

- Cache Poisoning:

Cache poisoning is an attack in which malicious data is injected into the cache, causing the system to serve incorrect or harmful data to the CPU or applications. To prevent cache poisoning, systems must implement validation checks to ensure that data stored in the cache is legitimate and has not been tampered with.

- Side-Channel Attacks:

Side-channel attacks exploit the physical characteristics of cache memory, such as timing or power consumption, to infer sensitive information. These attacks can be difficult to detect and prevent, requiring sophisticated countermeasures, such as cache partitioning or randomization, to protect against them.

- Data Encryption and Access Controls:

Encrypting data stored in cache memory and implementing strict access controls can help protect sensitive information from unauthorized access. These measures are particularly important in environments where cache memory is shared among multiple users or applications, as they prevent one user or process from accessing the data of another.

Advanced Cache Technologies: The Future of Cache Memory

As computing demands continue to grow, the technology behind cache memory is evolving to meet the needs of increasingly complex and performance-critical applications. Several advanced cache technologies are emerging, promising to further enhance the speed, efficiency, and reliability of computing systems.

1. Non-Volatile Memory (NVM): Revolutionizing Cache Storage

Non-volatile memory (NVM) technologies, such as Intel Optane, are changing the landscape of cache memory by providing persistent storage with speeds approaching those of traditional volatile memory (e.g., DRAM). Unlike traditional cache memory, which loses its contents when power is lost, NVM retains data even in the absence of power. This persistence offers significant advantages in terms of data integrity, recovery, and performance.

- Persistent Caching:

The integration of NVM into cache memory systems allows for the creation of persistent caches that retain their contents across system reboots or power failures. This capability is particularly valuable in mission-critical applications, where data loss due to power outages or system crashes can have severe consequences.

- Hybrid Memory Architectures:

Hybrid memory architectures combine traditional volatile memory (e.g., DRAM) with NVM to create a multi-tiered caching system that optimizes both speed and persistence. In these architectures, frequently accessed data is stored in volatile memory for ultra-fast access, while less frequently accessed or critical data is stored in NVM for long-term retention.

2. Intelligent Caching Algorithms: Leveraging AI and Machine Learning

Advancements in artificial intelligence (AI) and machine learning (ML) are enabling the development of intelligent caching algorithms that can predict access patterns and dynamically optimize cache content. These algorithms analyze historical access patterns, usage trends, and other contextual factors to make real-time decisions about which data should be stored in the cache.

- Predictive Caching:

Predictive caching algorithms use machine learning models to anticipate which data is likely to be accessed in the near future. By preemptively loading this data into the cache, these algorithms can reduce cache misses and improve overall system performance.

- Adaptive Cache Management:

Adaptive cache management techniques adjust cache allocation and eviction policies based on real-time analysis of system workload and access patterns. These techniques ensure that the cache is always optimized for the current usage scenario, maximizing efficiency and performance.

3. Distributed Caching: Enhancing Performance in Large-Scale Systems

In distributed computing environments, where data is spread across multiple nodes or data centers, distributed caching plays a crucial role in maintaining performance and consistency. Distributed caches store copies of data across various nodes, ensuring that data retrieval is quick and efficient, even in large-scale, geographically dispersed systems.

- Data Consistency in Distributed Caches:

Maintaining data consistency across distributed caches is a significant challenge, particularly in environments with high levels of concurrency and frequent data updates. Techniques such as eventual consistency, quorum-based replication, and conflict resolution are used to manage consistency while minimizing latency.

- Scalability and Fault Tolerance:

Distributed caching systems are designed to be highly scalable, allowing them to handle increasing workloads by adding more nodes to the cache network. They are also built with fault tolerance in mind, ensuring that the system can continue to operate even if individual nodes fail or become unavailable.

- Technologies and Tools:

Technologies like Apache Ignite, Redis, and Memcached are commonly used to implement distributed caching in modern applications. These tools provide a range of features, including in-memory data storage, replication, sharding, and advanced caching algorithms, making them well-suited for large-scale, high-performance computing environments.
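Sharding, mentioned above, means deciding which cache node owns each key. The sketch below shows the simplest form, hashing the key and taking the result modulo the number of nodes. The node names and the `shard_for` helper are hypothetical, and this is not how any of the named tools implements sharding internally.

```python
import hashlib


def shard_for(key, nodes):
    """Pick the node responsible for a key using a stable hash of the key."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[bucket]


# Hypothetical node names, for illustration only.
nodes = ["cache-node-a", "cache-node-b", "cache-node-c"]
placement = {key: shard_for(key, nodes) for key in ("user:1", "user:2", "cart:17")}
for key, node in placement.items():
    print(key, "->", node)
```

A stable hash (rather than Python's per-process `hash()`) ensures every client maps a key to the same node. One known limitation of plain modulo sharding is that changing the number of nodes remaps most keys, which is why production systems often use consistent hashing or fixed hash slots instead.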

Case Studies: Real-World Applications of Cache Memory

Understanding how cache memory is utilized in real-world applications provides valuable insights into its importance and functionality. The following case studies highlight the critical role of cache memory in some of the most demanding computing environments.

1. Google Search Engine: Achieving Lightning-Fast Query Response Times

Google’s search engine is a prime example of effective cache memory utilization. With billions of queries processed daily, Google relies on sophisticated caching strategies to deliver search results at lightning speed. The search engine uses multiple layers of cache, including CPU cache, disk cache, and distributed cache, to store frequently accessed data, such as popular search results, web page snapshots, and user preferences.

- Query Caching:

Google employs query caching to store the results of frequently searched queries, allowing the search engine to instantly retrieve and display results without having to reprocess the query. This caching mechanism is particularly effective for handling common searches and trending topics.

- Web Page Caching:

Google’s web crawlers continuously index and cache copies of web pages, images, and other online content. This cached content is used to generate search results quickly, reducing the load on web servers and improving the user experience.

- User-Specific Caching:

Personalized search features, such as search history and location-based results, rely on user-specific caching to deliver tailored search results. By caching user preferences and frequently accessed content, Google can provide a more relevant and responsive search experience.

2. Amazon E-commerce Platform: Enhancing User Experience and Scalability

Amazon’s e-commerce platform handles millions of transactions and user interactions daily, making efficient caching a critical component of its architecture. The platform uses various types of cache memory, including CPU cache, disk cache, and distributed cache, to ensure fast load times, seamless navigation, and scalable performance.

- Product Page Caching:

Amazon caches product pages, images, and user reviews to reduce load times and improve the shopping experience. This caching strategy is particularly important during peak shopping periods, such as Black Friday and Cyber Monday, when the platform experiences a significant increase in traffic.

- Session Caching:

User sessions, including shopping carts, wish lists, and browsing history, are cached to provide a consistent and personalized experience across multiple devices. By caching session data, Amazon ensures that users can quickly resume their shopping activities, even if they switch devices or experience interruptions.

- Dynamic Content Caching:

Amazon uses dynamic content caching to store frequently accessed data, such as product recommendations, personalized offers, and real-time pricing information. This caching mechanism allows the platform to deliver personalized content to users without compromising performance.

3. Netflix Streaming Service: Delivering High-Quality Video Content Globally

Netflix, one of the world’s leading streaming services, relies heavily on cache memory to deliver high-quality video content to users around the globe. With millions of users streaming content simultaneously, Netflix uses advanced caching strategies to reduce buffering times, ensure consistent video quality, and minimize latency.

- Content Delivery Network (CDN) Caching:

Netflix uses a global network of CDNs to cache video content at various locations close to users. By distributing content across multiple cache servers, Netflix can reduce the distance that data must travel, resulting in faster content delivery and reduced buffering.

- Adaptive Bitrate Streaming:

Netflix employs adaptive bitrate streaming to dynamically adjust video quality based on the user’s network conditions. Cache memory plays a crucial role in this process by storing multiple versions of the video content at different bitrates. This allows Netflix to quickly switch between bitrates, ensuring that users receive the best possible video quality with minimal interruptions.

- User-Specific Caching:

Netflix caches user-specific data, such as viewing history, recommendations, and personalized content lists, to enhance the user experience. This caching strategy allows Netflix to deliver a tailored streaming experience that meets the unique preferences and viewing habits of each user.

Advanced Cache Optimization Techniques: Pushing the Boundaries of Performance

As technology continues to evolve, new methods for optimizing cache memory are being developed to push the boundaries of system performance even further. The following advanced cache optimization techniques illustrate the ongoing innovations in the field of cache memory management.

- Prefetching Algorithms

Prefetching is an advanced technique used to anticipate future data requests and load the required data into the cache before it is actually needed. By predicting which data will be requested next, prefetching can significantly reduce cache miss rates and improve overall system performance.

- Hardware Prefetching: Modern processors often include hardware prefetchers that monitor the patterns of memory access and attempt to preemptively load data into the cache. This technique reduces latency by ensuring that the required data is readily available when needed by the CPU.

- Software Prefetching: In addition to hardware prefetching, software prefetching can be implemented by optimizing programs to include prefetch instructions. These instructions hint to the processor which data is likely to be used soon, allowing it to be loaded into the cache in advance.
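The effect of prefetching on miss counts can be illustrated with a toy simulation. The sketch below assumes a small LRU-managed cache and a very simple "next block" prefetcher that loads block `b + 1` whenever block `b` is accessed. Real hardware prefetchers detect strides and streams in far more sophisticated ways, so treat the function and its parameters as illustrative assumptions.

```python
from collections import OrderedDict


def count_misses(blocks, capacity, prefetch_next=False):
    """Count demand misses for a block trace in a small LRU cache."""
    cache = OrderedDict()   # least recently used block first

    def insert(block):
        cache[block] = True
        cache.move_to_end(block)
        while len(cache) > capacity:
            cache.popitem(last=False)   # evict the least recently used block

    misses = 0
    for block in blocks:
        if block in cache:
            cache.move_to_end(block)    # hit: refresh recency
        else:
            misses += 1                 # demand miss: fetch the block
            insert(block)
        if prefetch_next:
            insert(block + 1)           # speculatively load the following block
    return misses


sequential = list(range(16))            # a streaming, array-like access pattern
print(count_misses(sequential, capacity=4))                      # every access misses
print(count_misses(sequential, capacity=4, prefetch_next=True))  # only the first misses
```

On a sequential trace the prefetcher hides almost every miss. On irregular access patterns the same prefetcher can evict useful data to make room for blocks that are never used, which is why prefetch accuracy matters.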

- Cache Compression

Cache compression is a technique used to increase the effective capacity of the cache by compressing the data stored within it. By storing compressed versions of data, more information can fit into the same physical cache space, reducing cache misses and improving hit rates.

- Reduced Miss Rates: Cache compression reduces the likelihood of cache misses by enabling more data to be stored in the limited cache space. This is particularly beneficial for applications that require frequent access to large data sets.

- Decompression Overhead: While cache compression can improve performance, it also introduces some overhead associated with compressing and decompressing the data. Advanced compression algorithms aim to minimize this overhead to ensure that the benefits outweigh the costs.

- Dynamic Cache Partitioning

Dynamic cache partitioning involves dividing the cache into separate sections that can be allocated to different tasks or processes based on their specific needs. This approach helps optimize cache usage by ensuring that critical tasks receive priority access to cache resources.

- Quality of Service (QoS): Dynamic cache partitioning can be used to provide Quality of Service (QoS) guarantees for high-priority tasks. By allocating dedicated cache space to these tasks, the system can ensure that they receive the resources they need to maintain optimal performance.

- Workload Adaptation: The partitioning of cache can be dynamically adjusted based on the current system workload. For example, during periods of heavy multi-tasking, the cache can be reallocated to ensure that all running processes receive an adequate share of the cache, improving overall system efficiency.

- Cache-Aware Programming: Cache-aware programming involves writing software that is optimized to take full advantage of the available cache memory. By designing algorithms and data structures that are cache-friendly, developers can significantly improve the performance of their applications.

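- Spatial and Temporal Locality: Cache-aware programming relies on two principles. Spatial locality means that data stored near a recently accessed address is likely to be needed soon; temporal locality means that recently accessed data is likely to be accessed again. By accessing data sequentially and reusing it while it is still cached, programs can maximize cache hits and minimize cache misses.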

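- Loop Nest Optimization: One common cache-aware technique is loop nest optimization, which restructures nested loops so that data is accessed in a cache-friendly order. Typical transformations include loop interchange (reordering loops so the innermost loop walks through contiguous memory) and loop tiling, also called blocking (processing data in blocks small enough to stay in the cache). This reduces the number of cache misses and enhances performance, particularly in computationally intensive applications.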
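The effect of loop ordering on spatial locality can be illustrated with a small simulation. The sketch below is not a model of any real processor: it assumes a tiny, fully associative cache with LRU replacement, and the constants `LINE_SIZE`, `ELEM_SIZE`, and `NUM_LINES` as well as the helper names are hypothetical values chosen for illustration. It counts the misses produced when a two-dimensional array stored row by row is traversed in row-major versus column-major order.

```python
from collections import OrderedDict

LINE_SIZE = 64   # bytes per cache line (assumed)
ELEM_SIZE = 8    # bytes per array element (assumed)
NUM_LINES = 64   # cache capacity in lines (assumed, deliberately tiny)


def count_misses(addresses):
    """Count misses in a fully associative LRU cache holding NUM_LINES lines."""
    cache = OrderedDict()  # keys are line numbers, ordered oldest to newest
    misses = 0
    for addr in addresses:
        line = addr // LINE_SIZE
        if line in cache:
            cache.move_to_end(line)        # hit: mark line as most recently used
        else:
            misses += 1
            cache[line] = True
            if len(cache) > NUM_LINES:
                cache.popitem(last=False)  # evict the least recently used line
    return misses


def row_major(rows, cols):
    """Inner loop walks along a row, so addresses are consecutive."""
    for i in range(rows):
        for j in range(cols):
            yield (i * cols + j) * ELEM_SIZE


def column_major(rows, cols):
    """Inner loop walks down a column, so each step jumps a full row."""
    for j in range(cols):
        for i in range(rows):
            yield (i * cols + j) * ELEM_SIZE


ROWS, COLS = 256, 256
row_misses = count_misses(row_major(ROWS, COLS))
col_misses = count_misses(column_major(ROWS, COLS))
print(f"row-major misses:    {row_misses}")
print(f"column-major misses: {col_misses}")
```

Under these assumptions the row-major traversal misses once per cache line (8,192 misses), while the column-major traversal misses on every access (65,536 misses), because each line is evicted before its neighbouring elements are used. Real hardware adds set associativity, multiple cache levels, and prefetchers, so actual ratios will differ.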

Advanced Metrics for Evaluating Cache Memory Performance

Optimizing cache memory requires not only understanding its structure and types but also employing advanced metrics to evaluate its performance effectively. These metrics provide insights into how efficiently the cache memory is functioning and where improvements can be made. Below are the key metrics used to analyze cache memory performance:

- Cache Hit Rate

- Definition: The cache hit rate is the percentage of data requests that are successfully fulfilled by the cache without requiring access to slower storage.

- Importance: A high hit rate indicates that the cache is effectively storing and serving frequently accessed data, reducing latency and improving system performance.

- Optimization Strategies: To improve hit rates, systems can utilize larger cache sizes, intelligent prefetching algorithms, or more efficient data organization.

- Cache Miss Rate

- Definition: The cache miss rate is the percentage of data requests that are not found in the cache, requiring retrieval from slower storage tiers. It is the complement of the hit rate: miss rate = 100% minus hit rate.

- Miss Penalty: Cache misses introduce latency and increase the overall processing time. The cost of a cache miss depends on the time required to retrieve data from main memory or storage devices.

- Mitigation Techniques: Reducing miss rates involves optimizing cache eviction policies (e.g., Least Recently Used or Adaptive Replacement Cache) and employing smarter cache allocation strategies.

- Latency

- Definition: Latency is the time taken to retrieve data from the cache. It is usually judged against the much longer access times of main memory or secondary storage.

- Impact: Lower latency is critical for real-time applications like gaming, scientific simulations, and high-frequency trading.

- Measurement Tools: Tools such as Intel VTune Profiler and AMD uProf (the successor to the discontinued AMD CodeXL) can help analyze cache latency and identify bottlenecks.

- Throughput

- Definition: Throughput refers to the volume of data that can be processed or served by the cache within a specific time frame.

- Significance: Higher throughput ensures that the system can handle more instructions and tasks simultaneously, critical for server and multi-user environments.

- Enhancements: Cache partitioning and scaling techniques, including distributed caching, can boost throughput.

- Eviction Rate

- Definition: This metric measures how frequently data is evicted from the cache to make room for new data.

- Correlation: A high eviction rate might indicate insufficient cache size or inefficient cache management policies.

- Evaluation Tools: Software profilers and simulators can help identify and tune the eviction policies for better performance.

- Bandwidth Utilization

- Definition: Bandwidth utilization evaluates how efficiently the cache memory utilizes the data transfer bandwidth between the CPU and main memory.

- Optimization: Techniques like data compression and burst mode access can improve bandwidth efficiency.

- Energy Efficiency

- Definition: This metric assesses the energy consumed per unit of data processed by the cache, for example per access or per byte transferred.

- Importance: Especially relevant for mobile devices and data centers, where power efficiency translates directly into longer battery life and reduced operational costs.

- Strategies: Utilizing energy-efficient cache memory designs, such as SRAM (Static Random Access Memory) with low-power modes, can help achieve better energy efficiency.
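Several of the metrics above can be computed from a simple access trace. The following sketch assumes an LRU-managed cache with a fixed number of entries; the class `CacheStats`, the functions `simulate_lru` and `average_access_time`, the sample trace, and the timing figures are all hypothetical and serve only to show how hit rate, miss rate, and eviction rate relate to one another. It also applies the standard average memory access time estimate, hit time plus miss rate times miss penalty, to connect miss rate with latency.

```python
from collections import OrderedDict
from dataclasses import dataclass


@dataclass
class CacheStats:
    accesses: int = 0
    hits: int = 0
    evictions: int = 0

    @property
    def hit_rate(self):
        """Fraction of accesses served from the cache."""
        return self.hits / self.accesses if self.accesses else 0.0

    @property
    def miss_rate(self):
        """Fraction of accesses that had to go to slower storage."""
        return 1.0 - self.hit_rate if self.accesses else 0.0

    @property
    def eviction_rate(self):
        """Evictions per access."""
        return self.evictions / self.accesses if self.accesses else 0.0


def simulate_lru(keys, capacity):
    """Replay a trace of keys through an LRU cache and collect statistics."""
    cache = OrderedDict()
    stats = CacheStats()
    for key in keys:
        stats.accesses += 1
        if key in cache:
            stats.hits += 1
            cache.move_to_end(key)         # refresh recency on a hit
        else:
            cache[key] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict least recently used entry
                stats.evictions += 1
    return stats


def average_access_time(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average memory access time: hit time + miss rate * miss penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


trace = [1, 2, 3, 1, 2, 4, 1, 2, 5, 1]     # illustrative access trace
stats = simulate_lru(trace, capacity=3)
amat = average_access_time(1.0, stats.miss_rate, 100.0)  # assumed timings in ns
print(f"hit rate: {stats.hit_rate:.0%}, miss rate: {stats.miss_rate:.0%}")
print(f"evictions per access: {stats.eviction_rate:.2f}")
print(f"average access time: {amat:.1f} ns")
```

With this trace and a three-entry cache, half of the accesses hit, so an assumed 1 ns hit time and 100 ns miss penalty give an average access time of 51 ns. The example shows why even modest improvements in hit rate matter when the miss penalty is large.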

Tools and Techniques for Cache Performance Analysis

To effectively measure and optimize these metrics, developers and system architects often rely on specialized tools and methodologies:

- Simulation Tools: Tools like gem5 and Cachegrind (part of the Valgrind suite) allow detailed simulation of cache behavior under different workloads.

- Profiling Software: Profilers such as Intel VTune and ARM Streamline offer real-time monitoring of cache usage and performance.

- Benchmarks: Standardized benchmarks like SPEC CPU and LINPACK help evaluate and compare processor and memory-hierarchy performance across different systems, of which cache behavior is a major component.

By understanding and applying these metrics, IT professionals and organizations can ensure that their computing systems are fully optimized for speed, efficiency, and scalability.

Conclusion: The Future of Cache Memory Technology

As the demand for faster, more efficient computing continues to grow, the importance of cache memory in enhancing system performance becomes increasingly critical. Advances in cache memory technology, such as the development of intelligent caching algorithms, the integration of non-volatile memory, and the implementation of distributed caching systems, promise to further reduce latency, improve throughput, and enhance the overall efficiency of computing systems.

For both end-users and organizations, understanding and leveraging the power of cache memory is essential for optimizing computing environments and achieving competitive advantages. Whether in high-performance computing, cloud services, e-commerce platforms, or streaming services, cache memory will continue to play a central role in shaping the future of technology, driving innovation, and enabling new possibilities in the digital age.